Abstract

Many human-related activities show power-law decaying interevent time distribution with exponents usually varying between 1 and 2. We study a simple task-queuing model, which produces bursty time series due to the non-trivial dynamics of the task list. The model is characterized by a priority distribution as an input parameter, which describes the choice procedure from the list. We give exact results on the asymptotic behaviour of the model and we show that the interevent time distribution is power-law decaying for any kind of input distributions that remain normalizable in the infinite list limit, with exponents tunable between 1 and 2. The model satisfies a scaling law between the exponents of interevent time distribution (β) and autocorrelation function (α): α + β = 2. This law is general for renewal processes with power-law decaying interevent time distribution. We conclude that slowly decaying autocorrelation function indicates long-range dependence only if the scaling law is violated.

Content from this work may be used under the terms of the Creative Commons Attribution 3.0 licence. Any further distribution of this work must maintain attribution to the author(s) and the title of the work, journal citation and DOI.

1. Introduction

Studying human activity patterns is of central interest due to the wide practical usage. Understanding the dynamics underlying the timing of various human activities—such as starting a phone call, sending an e-mail or visiting a website—are crucial to modelling the spreading of information or viruses [1]. Modelling human dynamics is also important in resource allocation. It has been shown that for many human activities the interevent time distribution follows a power law with exponents usually varying between 1 and 2. Processes with power-law decaying interevent time distribution look very different from the Poisson process, which has been used to model the timing of human activities before [2, 3]. While time series from the latter look rather homogeneous, the former processes produce bursts of rapidly occurring events, which are separated by long inactive periods.

Some examples where power-law decaying interevent time distribution has been observed are email-communication (with exponent β ≈ 1, [4]), surface mail communication (β ≈ 1.5, [5]), web-browsing (β ≈ 1.2, [6]) and library loans (β ≈ 1, [7]). In some other cases a monotonous relation has been reported between the user activity and the interevent time exponent, for example in short-message communication (β∈(1.5,2.1), [8]) or in watching movies (β∈(1.5,2.7), [9]). In a recent paper there can be found a distribution of exponents of various channels of communications [10]. These observations make it necessary to find a model in which the interevent time exponent is tunable.

Similar bursty behaviour has been observed in other natural phenomena, for example in neuron-firing sequences [11] or in the timings of earthquakes [12]. The interevent time distribution does not give us any information about the dependence among the consecutive actions. Correlation between events is usually characterized by the autocorrelation function of the timings of detected events. Bursty behaviour is often accompanied by power-law decaying autocorrelation function [13], which is usually thought to indicate long-range dependence, see e.g. [14]. However, time series with independent power-law distributed interevent times show long-range correlations [15, 16].

This paper is organized as follows. We start with introducing a task-queuing model, which has an advantage compared to the Barabási-model [17], namely that the observable is not the waiting time of an action (from adding the task to the list until executing it) but the interevent time between similar activities. We determine the asymptotic decay of the interevent time distribution in a simple limit of the model. We give a simple proof of the scaling law between the exponents of the interevent time distribution and the autocorrelation function based on Tauberian theorems. Finally, we demonstrate that the scaling law can be violated if the interevent times are long-range dependent.

2. The model

We assume that people have a task list of size N and they choose their forthcoming activity from this list. The list is ordered in the sense that the probability wi of choosing the ith activity from the list is decreasing as a function of position i. The task at the chosen position jumps to the front (triggering the corresponding activity) and pushes the tasks that preceded it to the right (figure 1). This mechanism is responsible for producing the bursty behaviour because once a person starts to do an activity, that is going to have high priority for a while.

Figure 1. Dynamics of the list. In every timestep a random position is chosen from the list and the task at the chosen position jumps to the first position initiating the corresponding type of activity. The other tasks are shifted to fill in the gap.

Download figure:

Standard image High-resolution imageThe model is capable of covering many types of activities but now we only concentrate on one type. The tasks of this type are marked with A in figure 1 (e.g. watching movies). For the sake of simplicity we assume that the list contains only one single item of type A, all the others are activities of type B. It turns out that this restriction is important for the interevent time distribution but irrelevant for the autocorrelation function.

We show in our paper that this model produces power-law decaying interevent time distribution for a wide variety of the wi distributions. First we analyse the model with power-law decaying priority distribution wi, second we analyse the effect of exponentially decaying priority distribution and finally we discuss the stretched exponential case. If the list is finite, the power-law regime is followed by an exponential cutoff. The cutoff is the consequence of reaching the end of the list, from which a geometrically distributed waiting time follows: Pwt(t) ∼ (1 − wN)t. A marginal result of section 4 shows that the expectation value of the interevent time is equal to the length of the list independently on the priority distribution (as long as ergodicity is maintained). For the sake of simplicity we determine the exponent of power-law decaying region in the case of an infinitely long list where the exponential cutoff does not appear.

3. Interevent time distribution

The interevent time is equal to the recurrence time of the first element of the list. Let q(n,t) (n ⩾ 1) denote the probability of finding the observed element at position n after t timesteps without any recurrences up to time t. We emphasize here that q(n,t) is not a conditional probability and that gives the survival function of the interevent time distribution. The initial condition is set as q(n,1) = (1 − w1)δn,2, that is, at the first step the observed element moves from the front of the list to the second position with probability (1 − w1) (otherwise a recurrence would occur). The restriction not to recur is important because this makes large jumps to the front of the list forbidden for the observed element. Time evolution is given by

where is the survival function of the priority distribution. Equation (2) expresses that the observed element can be found at position n either if it was there in the previous time step and we choose a position smaller than n or it was at position n − 1 and we choose an activity at position k > n. Our aim is to determine the asymptotic behaviour of . The main trick we use in analysing the recursion above is to consider the n = const levels first instead of the t = const levels which intuitively one would do. At every step the time coordinate gets increased while the position of the observed element might remain unchanged or get increased by one as well (figure 2). The path of the element in the (n,t) plane can be divided into sections that start with a step on the bias and are followed by some steps upwards (which can be zero as well). These sections can be characterized by their height-difference, which can take values from . These height differences are independent and (optimistic) geometrically distributed with parameter depending on the position. Let ξk be independent geometrically distributed random variables with parameter Qk, i.e. . With n fixed q(n,t) is the probability that we find the element at the nth position at time t (without any recurrences). This corresponds to paths with n − 1 steps to the right (started from position 1 at time 0) and q(n,t) is the distribution mass function of the sum of the random heights:

We could go so far without specifying the priority distribution, now we have to specify Qn to continue.

Figure 2. Some examples for the path in the (n,t) plane. Some typical sections in the path corresponding to the ξk random variable are emphasized by a rectangle.

Download figure:

Standard image High-resolution image3.1. Power-law decaying priorities

Here we analyse the model in which the survival function of the priority distribution is power-law decaying, i.e. . means that term is asymptotically at most of the order of nω. In this case wi is also power-law decaying with exponent σ. Though the random variables ξk are not identically distributed—by checking the Lyapunov condition—one can show that the central limit theorem holds for this situation (see section A.2 of the appendix). In this approximation q(n,t) is Gaussian in variable t with mean and variance , where and . Using integral approximation to evaluate the sums and keeping only the highest order terms in n yields

This formula shows that the probability of finding an element at position n at time t is centred on the curve which is in agreement with the numerical results (figure 3).

Figure 3. Contour plots of q(n,t). The image on the left is a numerical result calculated directly form the recursion equations (1) and (2), the right plot shows the CLT approximation (4). The dashed curve is (c = 1).

Download figure:

Standard image High-resolution imageThe sum of q(n,t) in variable n is the probability of the observed element has not recurred up to time t. We approximate this sum by integral and we apply the following substitution (with new variable r):

For γ > 0 (here γ may refer to σ − 1/2,σ,2σ − 1):

where o(nω) means that term is asymptotically of strictly smaller order than nω. From equations (4)–(6) it follows that

Differentiating this equation gives the first main result of our paper, Pie(t) ∼ t−β with .

3.2. Exponentially decaying priorities

Now we study the model with Qn = ce−λn priority survival function. In contrast to the previous case the central limit theorem cannot be used, because the last term in is comparable with the complete sum.

However, we can construct a limit theorem even for this situation. First of all, we approximate the geometrically distributed random variables ξk ∼ Geom(1 − Qk) by exponential ones: . We apply the scaling property of the exponential distribution to express by i.i.d. exponential random variables: , where ηk ∼ Exp(c). In equation (3) we need the distribution of sums of the first n − 1 ξ:

Xn has a well defined limit as n → ∞, which we denote by X. The probability density function of this (limit) random variable is denoted by ψ(x) (and is given explicitly in section A.1 of the appendix). The finite sum Xn in equation (8) can be approximated by X, yielding and

since tends to 1 as t → ∞. By differentiating we get β = 2 independently on parameter λ.

3.3. Stretched exponentially decaying priorities

The last investigated priority distribution is the stretched exponential, i.e. Qn = c e−λnν .

Faster than exponential decay (ν > 1).

In this case is totally dominated by the last term in the sum, hence we approximate the sum with this term

This means that the β = 2 exponent holds for this case but a logarithmic correction appears.

Slower than exponential decay (ν < 1).

In this case the increment of Qn is just small enough for the central limit theorem to be still applicable (see the appendix). We approximate the mean and variance of by integral and we use the property . With these we get

The t → ∞ asymptotic behaviour of can be determined by introducing a proper new variable

Keeping the leading order term only, one finds that the survival function of the interevent time distribution is

For more details of the calculations see section A.3 of the appendix. We have got β = 2 with logarithmic correction again. While for ν > 1 the correction makes the decay of the distribution faster than the pure power law, for ν < 1 it is slightly slowed down.

4. Autocorrelation function

Another characteristic property of the time series is autocorrelation function. Let X(t) denote the indicator variable of the observed activity: X(t) = 1 if the observed activity is at the first position of the list at time t (i.e. an event happened) and X(t) = 0 otherwise. We define the autocorrelation function as usual,

The model defines a Markov-chain and the state space is the space of permutations of the list. The transition matrix for a list of length N is given by

where QN = 0. This matrix is doubly stochastic, hence . According to the recurrence formula of Marc Kac [18] we conclude that the expectation value of the interevent time is equal to the size of the list independently on the priority distribution (as long as ergodicity is maintained). The autocorrelation function can be written in simpler form making use of the knowledge of ,

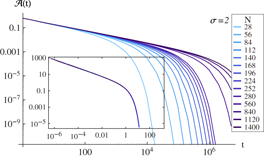

The probability of finding the activity at the first position can be calculated numerically by successive application of the Markov-chain transition matrix (18). For power-law decaying priority distributions numerical computations show that the autocorrelation function is power-law decaying with an exponential cutoff (figure 4). Given σ, the autocorrelation functions for various list sizes can be rescaled to collapse into a single curve (figure 4, inset). This property can be written in a mathematical way:

The exponents used for rescaling the autocorrelation functions are listed in table 1. Indicating that in the N → ∞ limit the autocorrelation function is power-law decaying with exponent for σ ⩾ 2. With the exact result this can be combined into a scaling law: α + β = 2.

Figure 4. Autocorrelation function of the model with various list sizes (with power-law decaying priority distribution). Inset: the curves corresponding to different N's collapse into a single curve if NδA(t) is plotted as a function of t/Nγ.

Download figure:

Standard image High-resolution imageTable 1. Numerical results for data collapse in figure 4: scaling parameters γ and δ for various values of σ.

| σ | 0.5 | 0.8 | 1 | 1.5 | 1.8 | 2 | 3 | 4 | 5 |

|---|---|---|---|---|---|---|---|---|---|

| γ | 1.07 | 1.15 | 1.17 | 1.57 | 1.8 | 2 | 3 | 4 | 5 |

| δ | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

If the priority distribution decays faster than power-law, then β = 2 and α = 0. This means that the autocorrelation function decays slower than any power. This is the case when the priority distribution decays like a (stretched) exponential function.

5. Proof of the scaling law

The essential properties of the model for the scaling relation are that the interevent times are independent and power-law decaying. Let T denote the set of recurrence times and let τ be an interevent time. The autocorrelation function can be written in the following form:

which simplifies to if β ⩽ 2. The Laplace transform of the autocorrelation function can be expressed by the Laplace transform of the interevent time distribution

where we used the property that the interevent times are independent and identically distributed. Tauberian and Abelian theorems connect the asymptotics of a function with the asymptotics of its Laplace transform [20]. Applying Abelian theorem to the right side of the last equation results in g(λ) ∼ λ1−β. Then applying the Tauberian theorem yields or

To be precise we had to use an extended version of the Abelian theorem, which can be derived from the original theorem using integration by parts. With similar train of thought the scaling law can be extended to the 2 < β < 3 region where β − α = 2 holds. These results are in agreement with [16, 19].

6. Models with non-independent interevent time distribution

Independence of the interevent times was important in the proof of the scaling law. The violation of the scaling relation indicates dependence among the interevent times but we emphasize that the opposite direction does not necessarily hold. In this section we investigate two models that are characterized by dependent interevent times. One of them satisfies the scaling relation (23) and the other does not.

6.1. A model with weak dependence

Our first example is the two-state model of [13], because that model was introduced to capture deep correlations, nevertheless, it obeys the scaling law. We start with a brief introduction of the model. The system alternates between a normal state A, in which the events are separated by longer time intervals, and an excited state B, which performs events with higher frequency. After each event executed in state A the system may switch to state B with probability π or remain in state A with probability 1 − π. In contrast, the excited state has a memory. That is, if the system had executed more events in state B since the last event in the normal state, the transition to state A would be less probable. The probability of performing one more event in state B is given by , where n is the number of events in the current excited state. This memory function was introduced to model the empirically observed power-law decay in the bursty event number distribution (for the definition see the reference). The dynamics is illustrated in figure 5(a) for a better understanding. In both states the interevent times are generated from a reinforcement process resulting independent random interevent times τA and τB with power-law decaying distributions. We denote the corresponding exponents by βA and βB. The parameters of the model were the following: π = 0.1, ν = 2.0, βA = 1.3, βB = 5.0, and it was found that the model satisfies the scaling law with exponents β = 1.3 and α = 0.7.

Figure 5. (a) Schematics of the two-state model. (b) Time series generated by the two-state model. The higher sticks correspond to events performed in state A and the lower sticks correspond to events performed in state B. Looking at the time series at a larger scale the ratio of B periods shrinks. For the better visibility of the time series the model parameters were changed to βA = 1.5 and βB = 3.

Download figure:

Standard image High-resolution imageThe number of events performed in a single period of state A or B are i.i.d. random variables NA or NB. NA is geometrically distributed with parameter π. The distribution of NB can be easily obtained from p(n), and it is power-law decaying with exponent 1 + ν. Making use of these new random variables the dynamics simplifies: the system executes NA events with interevent times distributed as τA, then switches to the excited state and executes NB events with interevent times τB, then switches back to the normal state, and so on.

It is clear that the decay of the interevent time distribution of the whole process is determined by the smallest of the exponents βA and βB. Since both of and are finite, the periods of state B have a characteristic length . Within the periods of state A there is no characteristic time-scale, hence by looking on the time series at a larger scale the effective length of the B periods will be shorter (figure 5(b))). Therefore the existence of the B periods is irrelevant for the asymptotic decay of the autocorrelation function giving rise to the scaling law.

6.2. A model with long-range dependence

Another simple way to introduce dependence between the interevent times is to construct a Markov-chain, i.e. the next interevent time depends only on the actual interevent time. As a counterexample to the scaling law we constructed a long-range dependent set of interevent times by Metropolis-algorithm. The base of the algorithm is constructing a Markov chain on the integers that has power-law decaying stationary distribution P(x) ∼ x−β. The algorithm uses a proposal density (transition rate) Q(x',xn) which generates a proposal sample from the current value of the interevent time. This sample is accepted for the next value with probability

otherwise the previous value is repeated. To generate a power-law distributed sample with long-range dependence the mixing of the Markov chain should be slow, i.e. the gap in the spectrum of the Markov chain should vanish. In this order we allow only small differences between consecutive interevent times: or equivalently x' as a random variable is discrete uniformly distributed: x' ∼ DU[xn − D,xn + D]. The simulation results (figure 6) show that the autocorrelation function decays slower than it would be assumed from the scaling law (23). This example shows that the scaling relation (23) can be violated if there is long range dependence among the interevent times.

Figure 6. Simulation results of the Metropolis algorithm. The exponent of the interevent time distribution is set to β = 1.5 and parameter D of the proposal density is varied. The dashed line shows the decay corresponding to the scaling law of independent processes.

Download figure:

Standard image High-resolution image7. Extensions of the model

A trivial extension of the model could be putting more items of the observed activity into the list. Then this parameter would tune the frequency of doing the activity. In this case the interevent times become dependent and the simulation results on finite lists show that the interevent time distribution decays faster than power-law but decays slower than an exponential function. It is easy to prove that in spite of this the autocorrelation function (17) is independent of the number of the observed activity and remains power-law decaying. This is another example for models in which the scaling law does not hold.

When the list is finite, we have much more freedom in the choice of the priority distribution. However, the method based on expressing q(n,t) as a probability density function of sum of n − 1 independent geometrically distributed random variables is general, and can offer a good base for further calculations, even for finite lists.

The model may be interesting not only for human dynamics but also for the mathematical theory of card shuffling [21]. We can define the time reversed version of the model in which we choose a position from the same distribution as in the original model and we move the first element of the list to the random position. This model has similar properties to the original model, e.g. this model has the same interevent time distribution and autocorrelation function. If we think of the list as a deck of cards, then the time reversed model is a generalization of the top-in-at-random shuffle method. The latter is a primitive model of shuffling cards: the top card is removed and inserted into the deck at a uniformly distributed random position [21].

8. Conclusion

In a proper model one should take into consideration the dependence among the interevent times besides the interevent time distribution. In human dynamics the latter is slowly decaying and as a consequence of this the autocorrelation function of the interevent times is not well-defined (i.e. the second moment does not exist). However, the autocorrelation function of the time series exists and it might be a good measure for long range dependence among the interevent times. Time series of messages sent by an individual in an online community are reported to be not more correlated than an independent process with the same interevent time distribution [16]. Similarly, the exponents in the neuron-firing sequences approximately satisfy the scaling law (α = 1.0, β = 1.1 [13]). For these systems the model we studied might be applicable. However, this is not always the case. For example, the autocorrelation function of the e-mail sequence decays slower (α = 0.75 [13]) than it should do estimated from the scaling law. This indicates long range dependence in the time series in addition to the power-law decaying interevent time distribution. In this case a dependent model should be applied, for example a model based on Metropolis algorithm that is similar to the one we studied as a counterexample to the scaling law. Effects of circadian patterns and inhomogeneity in an ensemble of individuals should also be considered [22].

In real networks interactions between individuals have to be taken into account to reproduce some social phenomena, e.g. temporal motifs [23] observed in a mobile call dataset. Interactions could be incorporated in this model by allowing the actual activity of an individual to modify the priority list of some of his/her neighbour. If this effect is rare and can be considered as a perturbation, our results on the dynamics of the list could be a starting point to further calculations covering for example information flow in a network.

Acknowledgments

The model discussed here was introduced by Chaoming Song and Dashun Wang. We are grateful to them, László Barabási and Márton Karsai for discussions. This project was partially supported by FP7 ICTeCollective Project (no. 238597) and by the Hungarian Scientific Research Fund (OTKA, no. K100473).

Appendix

A.1. Limit theorem for a geometric series of exponentially distributed random variables

In this section we determine the probability density function of the limit random variable X. In the main text we defined Xn and X as follows:

where ηk ∼ Exp(c) are i.i.d. exponential random variables. The probability density function of sums of independent exponential random variables with different parameters can be expressed [24]. Substituting the proper parameters into that formula one gets

With some trivial algebraic manipulations this can be written in the following form:

The probability density function in the n → ∞ limit reads

The products are usually cited as q-Pochhammer symbols (e−λ,e−λ)s and are convergent in the s → ∞ limit. Equation (A.5) shows clearly that the asymptotic behaviour of the limit random variable X is determined by the first term in the sum.

A.2. Central limit theorem for the power-law decaying case

The Lyapunov condition for the central limit theorem reads

If this condition is fulfilled for any δ, then the central limit theorem can be used. We test this condition at δ = 1 with the following moments:

Substituting these and approximating the sums by integrals yield

A.3. Central limit theorem for the stretched exponential case (ν < 1)

We test the Lyapunov condition (A.6) at δ = 1 similarly to the previous case. The moments are the following:

We make integral approximation of the sums of the moments above:

The last approximation comes from the property that . With these we have

if ν < 1.

Now we give some more details in calculating the β exponent. The mean and variance appearing in the central limit theorem are

and the approximating probability density function is

We introduce a new variable

in the second equation . The dominant term in the Jacobian when t → ∞ is . With these the integral reads

We underlined the terms that determine the decay of the integral for large t.

![$\left \langle \mathbb {E}\left [X(t)\right ]\right \rangle _t=\frac {1}{N}$](https://content.cld.iop.org/journals/1367-2630/15/10/103023/revision1/nj475839ieqn25.gif)

![$\left \langle \mathbb {E}\left [X(t)\right ]\right \rangle _t$](https://content.cld.iop.org/journals/1367-2630/15/10/103023/revision1/nj475839ieqn26.gif)

![$\mathbb {E}\left [\tau _B\right ]$](https://content.cld.iop.org/journals/1367-2630/15/10/103023/revision1/nj475839ieqn32.gif)

![$\mathbb {E} [N_B]$](https://content.cld.iop.org/journals/1367-2630/15/10/103023/revision1/nj475839ieqn33.gif)

![$\xi _t=\mathbb {E} [\tau _B]\mathbb {E}[N_B]$](https://content.cld.iop.org/journals/1367-2630/15/10/103023/revision1/nj475839ieqn34.gif)